Recently, an interesting paper was published on arXiv:

Gamaleldin F Elsayed, Shreya Shankar, Brian Cheung, Nicolas Papernot, Alex Kurakin, Ian Goodfellow & Jascha Sohl-Dickstein 2018. Adversarial Examples that Fool both Human and Computer Vision. arXiv:1802.08195.

However, perhaps even more interesting is the lively debate about it via Two Minute Papers by Károly Zsolnai-Fehér:

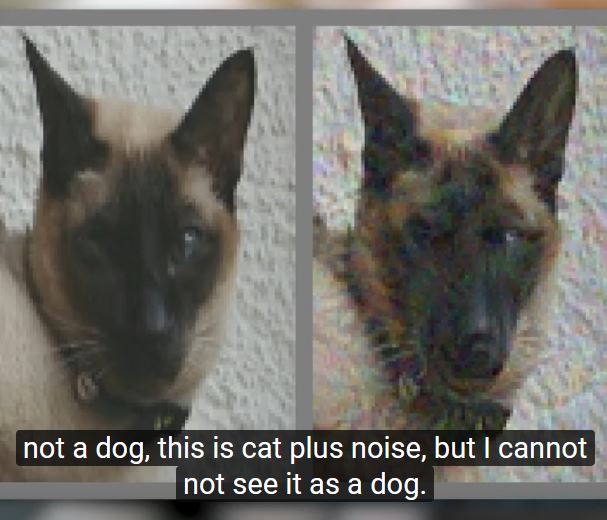

The word adversarial (from adversary; in German: nachteilig, ungünstig, gegnerisch) comes from the Latin adversarius, from adversus (hence also "adverse"), and means unfavourable or antagonistic in purpose, effect, interest, etc. Synonyms are opposed and adversative; antonyms are supportive, allied and assisting.

Adversarial examples are input data to AI/ML models (see here the differences between Artificial Intelligence (AI), Machine Learning (ML), and Deep Learning (DL)) that are deliberately crafted to cause malfunctions. For research purposes, adversarial examples are excellent test data for checking the robustness of a model. Here is another very good paper on the topic (767 citations as of today, April 6, 2018):

Ian J. Goodfellow, Jonathon Shlens & Christian Szegedy 2014. Explaining and harnessing adversarial examples. arXiv:1412.6572.
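To make the idea a bit more concrete, here is a minimal sketch of the fast gradient sign method (FGSM) proposed in the Goodfellow et al. paper above: the input is shifted by a small step ε in the direction of the sign of the loss gradient, x_adv = x + ε · sign(∇ₓ J(θ, x, y)). The sketch assumes a toy logistic-regression classifier with hand-picked, purely hypothetical weights, so that the input gradient can be written in closed form. It is not the authors' code; attacks on deep networks would compute the gradient with an automatic-differentiation framework instead.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def predict(w, b, x):
    """Probability of class 1 under a logistic-regression model."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def loss_grad_wrt_input(w, b, x, y):
    """Gradient of the cross-entropy loss J with respect to the input x.

    For logistic regression this is dJ/dx = (p - y) * w, where p = predict(w, b, x).
    """
    p = predict(w, b, x)
    return [(p - y) * wi for wi in w]


def sign(v: float) -> int:
    # -1, 0 or +1, like numpy.sign
    return (v > 0) - (v < 0)


def fgsm(w, b, x, y, eps):
    """Fast gradient sign method: x_adv = x + eps * sign(grad_x J)."""
    g = loss_grad_wrt_input(w, b, x, y)
    return [xi + eps * sign(gi) for xi, gi in zip(x, g)]


# Hypothetical toy model and input, chosen only for illustration.
w = [1.0, -2.0, 0.5]
b = 0.0
x = [0.2, -0.1, 0.3]
y = 1      # true label
eps = 0.2  # maximum change allowed per input feature

x_adv = fgsm(w, b, x, y, eps)

if __name__ == "__main__":
    print("clean p(class 1):      ", round(predict(w, b, x), 3))
    print("adversarial p(class 1):", round(predict(w, b, x_adv), 3))
```

With these toy numbers, the clean input is assigned to class 1 (p is about 0.63), while the perturbed input, in which no feature moves by more than ε, falls below 0.5 and is assigned to class 0. The sign step is what makes the method "fast": it needs only one gradient evaluation and bounds the perturbation in the max-norm.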